The Origin of Information

A really good explanation of why information, in its quantitative sense, is negentropy.

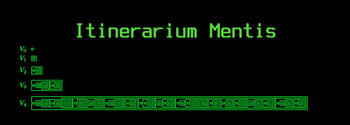

Consider the following case. Suppose someone, Alice, does not know the location of \(P\) but is told that it lies somewhere in \((A,B)\), and let us normalize the information Alice possesses about the location of \(P\) to \(0\). Now let her ask questions that can be answered with “yes” or “no” to narrow down the possible location of \(P\). Her best strategy is the classic bisection procedure familiar from the proof of the Bolzano-Weierstrass theorem:

- Divide \((A,B)\) in half (geometrically, we can make a paper copy of the bar \(AB\) and fold it to locate its center) and ask whether \(P\) lies to the right of the central line: yes or no? In our diagrammatic example, \(1L\) means that after the first question \(P\) is on the left.

- Then divide the left half \(1L\) in two and repeat. In our case the answer is \(2R\). And so on.

After the fourth question we have narrowed the location of \(P\) down to \((a,b)\). If “left” is represented by \(0\) and “right” by \(1\), then \((a,b)\) is represented as \(0110\).

In information theory's definition, only the number of questions asked matters (each question halving the remaining possibilities). We have asked \(4\) questions, so we have gained \(4\) bits of information: out of the \(2^4=16\) equal subintervals of \((A,B)\), we have singled out \((a,b)\).
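The question-asking procedure above can be sketched in Python as a minimal illustration. The coordinates are hypothetical: we assume \((A,B) = (0,1)\) and place \(P\) at \(13/32\), a value chosen only so that the answers reproduce the string \(0110\); nothing here is read off the figure. The function name `locate` is likewise just an illustrative choice. Exact fractions are used so that no rounding happens at the midpoints.

```python
from fractions import Fraction
import math


def locate(p, a, b, n_questions):
    """Bisect (a, b) by asking n yes/no questions "is P to the right of the midpoint?".

    Returns the answers as a bit string (0 = left, 1 = right) and the final subinterval.
    A point exactly on a midpoint is counted as "left" (an arbitrary convention).
    """
    bits = []
    for _ in range(n_questions):
        mid = (a + b) / 2
        if p > mid:        # answer "yes": keep the right half
            bits.append("1")
            a = mid
        else:              # answer "no": keep the left half
            bits.append("0")
            b = mid
    return "".join(bits), (a, b)


# Hypothetical setup: (A, B) = (0, 1), P at 13/32.
bits, (a, b) = locate(Fraction(13, 32), Fraction(0), Fraction(1), 4)
print(bits, a, b)                  # 0110 3/8 7/16
print(int(bits, 2))                # 6: (a, b) is subinterval number 6 of 0..15
print(math.log2(2 ** len(bits)))   # 4.0 bits, i.e. log2 of 16 alternatives
```

Each answer halves the interval, so four answers leave a subinterval of length \(1/16\), and the answers themselves, read as a binary number, index which of the 16 pieces it is.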

This is all quite standard. Now let's turn to physics. Suppose that \((A,B)\) is a cold metal bar and that \((a,b)\), shown in red, is suddenly heated, so that the temperature of \((a,b)\) rises. If we know that \(P\) lies in the interval where the temperature is high, we can ask the equivalent question “is the interval where the temperature is high to the left of the central line or not?”, and repeat. But if, by the Second Law, the bar approaches equilibrium and its temperature becomes uniform (that is, as its entropy increases), the information singling out the heated interval \((a,b)\) vanishes, and we can no longer locate \((a,b)\) by asking questions about the temperature of the bar.
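A toy numerical version of the heated bar, under loudly simplifying assumptions: the bar is cut into 16 cells, cell 6 (binary \(0110\)) starts hot, heat spreads by a simple explicit finite-difference diffusion with insulated ends, and the observer can only tell two temperatures apart if they differ by more than a hypothetical `resolution`. The names `diffuse` and `ask_hot_bits` are illustrative, not taken from any library.

```python
def diffuse(temps, alpha=0.25, steps=1):
    """Explicit finite-difference heat diffusion on a 1-D bar with insulated ends.

    alpha <= 0.5 keeps this scheme stable; the total heat is conserved.
    """
    t = list(temps)
    n = len(t)
    for _ in range(steps):
        new = t[:]
        for i in range(n):
            left = t[i - 1] if i > 0 else t[i]        # insulated end: no heat flux
            right = t[i + 1] if i < n - 1 else t[i]
            new[i] = t[i] + alpha * (left - 2 * t[i] + right)
        t = new
    return t


def ask_hot_bits(temps, n_questions, resolution):
    """Locate the hot region by bisection, asking "is the hotter half on the right?".

    Returns the answers as a bit string, or None as soon as the two halves
    cannot be told apart at the given temperature resolution.
    """
    lo, hi = 0, len(temps)
    bits = ""
    for _ in range(n_questions):
        mid = (lo + hi) // 2
        left_mean = sum(temps[lo:mid]) / (mid - lo)
        right_mean = sum(temps[mid:hi]) / (hi - mid)
        if abs(right_mean - left_mean) <= resolution:
            return None                  # the bar looks uniform here: no answer
        if right_mean > left_mean:
            bits += "1"
            lo = mid
        else:
            bits += "0"
            hi = mid
    return bits


bar = [0.0] * 16
bar[6] = 1.0                                           # the heated interval (a, b)
print(ask_hot_bits(bar, 4, resolution=1e-6))           # 0110
equilibrium = diffuse(bar, steps=5000)                 # long after heating: near-uniform
print(ask_hot_bits(equilibrium, 4, resolution=1e-6))   # None
```

`diffuse` leaves the total heat unchanged; what disappears is the temperature difference that the questions relied on.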

By the way, while writing this it became clear to me, again, that intuitionists are a kind of meta-mathematician, not in the sense of mathematical logic, but in trying to make everything explicit: \((a,b)\) might “exist” in an ideal world, but that is irrelevant, because to single out \((a,b)\), constructions (or something similar) still need to be made.

Notice also that “information”, defined quantitatively, is still operational: it relies on an agent to perceive meaning.

Then there is Landauer's principle, which I will skip for the moment.

If “no operation by a machine on a message can gain information,” and if, on the other hand, “there is no reason . . . why the essential mode of functioning of the living organism should not be the same as that of the automaton,” then where does information come from?

As usual, cyberneticians will try to explain it as pure chance. Well. But they may still deny that the Second Law is violated: when information is extended (distributed, communicated), energy is also consumed, which increases entropy, and the two balance out. Again, the entropy of the universe as a whole increases, even if the entropy of a local system may decrease through fluctuation.

Such is, in sum, the solution proposed by a multitude of contemporary scientists: Erwin Schrödinger, Harold F. Blum, Pierre Auger, Norbert Wiener, and Joseph Needham. It is not essentially different to the old theory of Herbert Spencer, who also “explained” the evolution, that is to say the appearance, of structures, by coupling an integration of matter and a concomitant dissipation of motion.

Refutation of the solutions proposed

General

There are some errors in Ruyer's argument, since we also have the notion of information compression, etc., but the overall idea is unproblematic. Basically, the structure inherent in information isn't taken into account when information is measured quantitatively. A perfectly legitimate and meaningful chunk of information, say a program written in Rust, while highly structured, may mean nothing to a medieval scholar and may not really be distinguishable, for him, from a chunk of gibberish. “The measure of information, like all measurement, must be made intelligently and relative to a certain mental context”: things only emerge within a logical framework, broadly speaking. The point is also often made clear in information theory textbooks, but people seem not to take it seriously.
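To make the point concrete, here is a small sketch using one particular quantitative measure, the zeroth-order (character-frequency) Shannon entropy of a string. The Rust one-liner, the helper name `char_entropy_bits`, and the fixed shuffle seed are arbitrary choices for illustration. Because this measure looks only at how often each character occurs, shuffling the program into gibberish leaves it unchanged: the structure is invisible to it.

```python
import math
import random
from collections import Counter


def char_entropy_bits(text):
    """Zeroth-order Shannon entropy (bits per character) from character frequencies."""
    n = len(text)
    counts = Counter(text)
    # math.fsum is correctly rounded, so the result does not depend on the
    # order in which the distinct characters happen to be counted.
    return -math.fsum(c / n * math.log2(c / n) for c in counts.values())


program = 'fn main() { println!("Hello, world!"); }'
chars = list(program)
random.Random(0).shuffle(chars)      # fixed seed, purely for reproducibility
gibberish = "".join(chars)           # same characters, scrambled order

print(gibberish)
print(char_entropy_bits(program))
print(char_entropy_bits(gibberish))  # same value as the line above
```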

“The supposed initial state of the universe, with free energy maximized and entropy minimized, represents a homogenous order and not a structured order.” This can be read as minimal entropy or as minimal Kolmogorov complexity; the latter illustrates the point better, since the sequence \(00000\ldots\) has as small an entropy (and as short a description) as possible, yet it is homogeneous and has no structure to speak of.
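Kolmogorov complexity itself is not computable, but the length of a compressed encoding gives a rough, computable upper-bound proxy for it. A minimal sketch with Python's standard `zlib` (the length `n` and the helper name `compressed_size` are arbitrary choices): the homogeneous sequence \(000\ldots\) shrinks to almost nothing, while bytes from the operating system's randomness source essentially do not shrink. Minimal complexity, in other words, goes with homogeneity, not with structure.

```python
import os
import zlib


def compressed_size(data: bytes) -> int:
    """Length of the zlib-compressed data at the maximum compression level.

    Only a crude upper-bound proxy for Kolmogorov complexity, which is
    not computable.
    """
    return len(zlib.compress(data, 9))


n = 100_000
homogeneous = b"0" * n      # the sequence 000...: homogeneous, no structure
noise = os.urandom(n)       # bytes from the OS randomness source

print(compressed_size(homogeneous))  # a tiny fraction of n
print(compressed_size(noise))        # about n: essentially incompressible
```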

“A man whose job is to write letters may be assisted in his work by a secretary, or by a guide to commercial correspondence, but he is not sensibly aided by increased nutrition and a flow of ‘free energy’ that passes into his system.” Another quite silly but equally precise example.

Schrödinger, Blum, etc.

They tried to explain structural information itself by some “mutation” or fluctuation that is retained and reproduced indefinitely. Against this, recent progress in biology, in particular in experimental embryology (that is, developmental biology), offers Michael Levin's experiments:

Keep in mind a crucial point: in all these experiments, the genes of the worms are never edited. You get a wildly different functional worm with the same genes. And what’s even wilder is that some of these changes are enduring: without any further drugs or modifications, the two-headed worm produces offspring that are also two-headed, indefinitely.

The Origin

A world of possibilities is again assumed, together with the general theme of emanation:

It is not human consciousness that brings artistic or technical themes into existence, any more than it truly creates the work. Human consciousness is the medium between the world of possibilities and the world of things.

This I really don't like. It is a problem of metaphysics, or even of meta-metaphysics. As Ruyer himself puts it:

what difference is there between preformation and epigenesis if epigenesis is conceived as a preformation in which the form exists in advance, not in space, but in a trans-spatial world?

And I don't see him really solving the problem. The problem is metaphysical, even meta-metaphysical, since it is unclear what would count as a solution in the first place. What do we want to know? What should be accepted as a solution?